Facebook’s Project Aria and Gemini

When I last wrote on AR, we had gotten a little peek into Apple’s augmented reality plans via some rumors and patent filings. The other company that is very hot on VR and AR is Facebook, which conveniently owns Oculus, the most popular VR headset. What you see in the image above is a device that Facebook employees have been using for data collection called Gemini. To be clear, these clunky units are not AR glasses; they are merely data collectors.

What Facebook hopes to accomplish with these is to learn what they need to do to build real AR glasses. About a year ago they announced their AR testing program called Project Aria, and Gemini is the first public sign of what they are up to. Obviously, like Apple, they are at a very early stage here. The Apple rumors indicated we wouldn’t see anything from them until 2025 at the earliest. Facebook is likely on a similar schedule, unless they have much smaller ambitions for the first commercial product.

But the reason Facebook and Apple are so hot on AR is that they are trying to invent the first new computer interface since graphical interfaces were invented in the 1970s.

Each computing platform becomes more ubiquitously accessible and natural for us to interact with. While I expect phones to still be our primary devices through most of this decade, at some point in the 2020s, we will get breakthrough augmented reality glasses that will redefine our relationship with technology...

Even though some of the early devices seem clunky, I think these will be the most human and social technology platforms anyone has built yet.

-Mark Zuckerberg, January 9, 2020

That last sentence echoes Tim Cook’s sentiments:

The reason I'm so excited about AR is I view that it amplifies human performance instead of isolates humans. And so as you know, it's the mix of the virtual and the physical world and so it should be a help for humanity, not an isolation kind of thing for humanity.

-Tim Cook, November 2, 2017

The idea here is we spend all this time staring at our phones and other screens, and this dissociates us from the real world. AR glasses, mixing the real world with a computer-generated one, place us back in the real world. It is a new human interface paradigm, the first new one in a long time.

Compared to Apple, Facebook brings a lot to the table, but is also missing key pieces, notably a phone, an operating system, chip design, a sensor tech stack, and most important, on-device voice recognition, AKA Siri.

Defining Our Terms

VR, or Virtual Reality, is a system that feeds the user an entirely computer generated environment via a headset and usually a glove or other handset for control. Everything the user sees and hears is computer generated. The best current example is Facebook's Oculus headset.

AR, or Augmented Reality, mixes computer generated elements with the real world. This can be a heads-up display, or computer generated objects that are situated in a real world environment and move with it. The example that many will be familiar with is Pokemon Go.

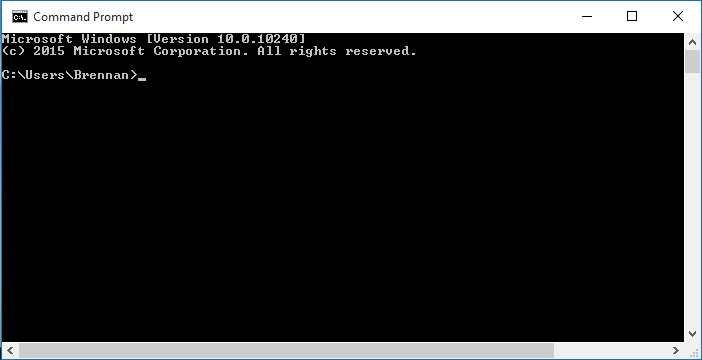

Interfaces

That original IBM punched card was invented in 1928 and was still in wide use in almost the same form in the 1980s. By then command line interfaces had overtaken the punched card. Command line still exists in some form in every computer operating system.

The graphical interface was invented at Xerox in the 1970s, but the first successful commercial product to use one was the Mac in 1984, followed soon by Windows. In 2007, iPhone replaced the mouse with a finger, but the basic graphical metaphor has remained unchanged.

The New Interface Paradigm

AR is one half of the new interface paradigm. In current interfaces, we input with a mouse/pad, keyboard or touch. We get feedback from the display, sound and haptics. AR replaces the graphical display with the real world mixed with computer generated elements like heads-up displays and computer generated objects seamlessly laid into the three dimensional world.

Here is where Facebook’s primary work has been, and this is the outgrowth of their Oculus purchase. Oculus is a VR headset, mostly for gaming, which is a much more limited market than the ambitions for AR. But it gives them a lot of experience in developing systems around sound and vision, especially the hardware part where they need the most help.

It also gives them an installed base of devices, though not a terribly large one, once they want to roll out features. They have already given developers APIs to program AR experiences in the Oculus Quest 2, and it looks like more may be coming with the upcoming Oculus Quest 3. Inasmuch as they can get Oculus users to use new AR apps or games, that is a source of data for the AR glasses effort, just as Gemini is. They already reached a key milestone with Quest 2, which was untethering it from another device.

The other half is voice control, and Facebook is busy at work here. Their primary work here is with AI systems that don’t have to be trained in new languages with human transcriptions. They are also paying people for their voice recordings to train it.

Where Gemini Fits In

Gemini is an indication of where Facebook is on AR, which is near the beginning like Apple. Even without any actual AR hardware in there, these are clunky beasts, as Zuckerberg admitted, and there’s a lot of work in miniaturization to be done.

In augmented reality, you're going to really need a pair of glasses that look like normal looking glasses in order for that to hit a mainstream acceptance. And that, I think, is going to be one of the hardest technical challenges of the decade. It's basically fitting a supercomputer in the frame of glasses

-Mark Zuckerberg, April 28, 2021

These are very thick plastic frames that, like Quest, run on Qualcomm’s (QCOM) Snapdragon platform. They have a variety of sensors and four cameras, which also seem to be the same as Quest’s. So this is a hugely pared down Quest 2, in effect, that only gathers data. The sensors are constantly logging data (there is an off switch for that), but the cameras have a shutter button and record all four streams at once.

What Facebook is up to here is learning. How are people using these things? Are they able to keep them on for long periods? What missing data streams will we need to make this work? This will add to what they are learning via the AR features in Quest 2 and soon Quest 3.

If you haven’t already guessed, this is a very long-term and expensive project where progress is measured in millimeters. There is a reason that only two companies are really investing very seriously in it, Microsoft Hololens and Google Glass notwithstanding.

Facebook’s Play and The Metaverse

There are no do-overs in the smartphone business

This is part of a big strategic play for Facebook to succeed with a hardware/software/services product in a way they could not in 2013 with the HTC First with Facebook Home, AKA the Facebook phone. However, the question remains: will people reject having Facebook be that persistent in their lives, as they did resoundingly in 2013?

Facebook and Apple are pursuing the same goal in one way: they are trying to extend what they do very well into a new device and interface. Apple wants to translate the iPhone experience to one where you remain much more engaged with your surroundings. Facebook wants you to always be on Facebook, just as the Facebook phone was always on Facebook. This is the metaverse.

The metaverse is a word many are bandying about lately. The idea is to create complex virtual worlds like games, except that everything can happen there: meetings, live events, conventions, retail, and also games of course. It is a combination of persistent virtual worlds and the real world interacting - the world of the film Ready Player One, but hopefully less awful. The two main games companies working on this are Epic with Fortnite and Roblox.

We got a taste of Facebook’s metaverse in a recent presentation and interview with CBS This Morning:

I don’t think much of Facebook Horizon Workrooms. In the first place, no one at Facebook has explained what problem it solves. Right now it is just Zoom with cartoony avatars. An interactive demo where Zuckerberg showed Gayle King around a virtual world would have shown off the metaverse vision more, but would have been harder to shoot for TV, so they settled for a demo that didn’t show much.

Also, nobody is going to want to sit through a long meeting with an Oculus on their face and the controllers in their hands the whole time.

But that's not the point of the demonstration and interview for Facebook. They wanted to give a peek behind the curtain and get people used to thinking of themselves as avatars in a 3D world in more than just games. Now imagine the same demonstration except with a pair of normal looking AR glasses rather than the whole Oculus rig. Zuckerberg takes Gayle King on a tour through virtual Facebook offices where she meets other Facebook employees who show her what they are working on. Both remain anchored to their real surroundings as well as their virtual ones. At that point it is a much more natural experience than what they showed on CBS This Morning.

Ultimately, Facebook wants us living in this world all the time, the way that “Jerry” is living inside Facebook Home in that 2013 ad. Of course being Facebook, they will be tracking users' every move and serving up ads everywhere you look.

Facebook vs. Apple

Both Mark Zuckerberg and Tim Cook think that AR devices will eventually be the thing that displaces the touchscreen smartphone as people’s main device. I tend to agree. So let’s compare what they bring to the table.

Facebook’s main advantage over everyone here is Oculus, which they have owned since 2014. They now have seven years of experience building this hardware, and have five years of data on actual usage in the wild. There are probably something like 2 million Quest 2 units out in the wild, which is a nice sized test group for their AR ambitions.

Apple hopes to close this gap soon with a VR/AR headset in 2022 or 2023. For them, it is more important as a bridge to the AR glasses than as a dedicated platform like Oculus.

But this is where Facebook’s advantage begins and ends.

A phone: The first versions will likely need to be tied to a phone, and Apple can add hardware and software to accommodate that.

Chip design: I expect Facebook will want to remedy this deficit before they launch AR glasses, but Apple gets to design a chip matched to the device, not a general purpose Qualcomm Snapdragon.

Operating system: Again, Facebook may want to remedy this. They are currently using the Android core open source operating system with their own VR/AR libraries laid on top. At some point they will want deeper device integration, though it will likely remain Android based.

The sensor stack: Apple has the most advanced and complete sensor stack of any products company because of iPhone, Watch and AirPods. These include GPS, gyroscopes, accelerometers, altimeters, LiDAR and the U1 ultrawideband chip. Facebook also has plenty of this but not as complete.

On-device voice recognition: This was the biggest announcement this spring at Apple’s Worldwide Developers Conference – that with iOS 15, voice recognition will happen on-device rather than sending recordings to the cloud as everyone currently does. This was a key milestone for Apple, and was made possible by their control of chip design and system software.

Spatial audio: Apple has put a ton of effort into what is now a minor feature. But the reason for that effort is that it will be key in giving feedback to the user in an AR glasses device.

Finally, Apple is the king of miniaturization. The system-in-package in AirPods Pro is a work of art:

The good news for Facebook is that they have plenty of cash, and are willing to spend to catch up:

Just The Beginning

We are in the very early stage of this, so there will be little near-term effect on either Apple or Facebook except large R&D spends. But both seem to be gunning for a 2025 time frame for these things so they are headed for another collision. There will be room for both products. Apple’s will be premium priced. Facebook’s will be far less expensive, but come with far more ads to make up for it.